...in the beginning:

Performance online has been a consideration for premier brands since the commercialization of the Internet in the mid-90s. During these early days, best practices were network and infrastructure focused. This meant connecting directly to the backbone networks where large concentrations of your users originated and oversizing your Data Centers to support unanticipated peak traffic. For this reason, HSPs (Hosting Service Providers) evolved to bring these best practices within reach of small and midsize companies. Ensuring that your data center or hosted infrastructure is well connected, well managed, and running current hardware and software is clearly still a best practice today.

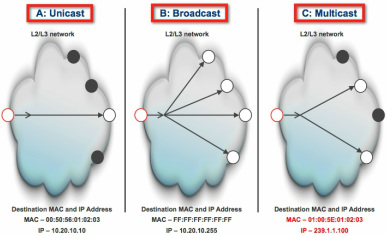

The first significant effort to distinguish between web and video delivery grew from the recognition that live video traffic would be the most demanding load, because video files are large compared with web content and demand for live events can be wildly unpredictable. IP Multicast established a highly efficient routing tree structure for serving a single source to many users (1 to many distribution) in order to reduce this type of traffic load on network resources. Without IP Multicast, two requests for the same live content from a given destination network generate twice the load across the network; with Multicast in place, the requests generate only a single load. For very popular content it is a highly effective technical solution, yet adoption has been extremely slow. To this day, it is not widely used outside of individual networks due to a combination of commercial and technical concerns (settlement agreements, load, reliability, etc.). It is considered a best practice within MVPD (Multichannel Video Programming Distributor) networks for linear programming and within enterprise WANs.
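
The unicast vs. multicast arithmetic above can be sketched as a tiny function. This is a simplified model, not a routing implementation: it assumes a single upstream link into one destination network, ignores protocol overhead, and the function name `link_load_bytes` and the 1 MB segment size are hypothetical.

```python
def link_load_bytes(stream_bytes: int, viewers: int, multicast: bool) -> int:
    """Bytes crossing one upstream link into a single destination network.

    Simplified model (assumption): unicast sends one full copy per viewer,
    while multicast sends one copy across the link and routers replicate
    it further down the distribution tree.
    """
    if viewers <= 0:
        return 0
    return stream_bytes if multicast else stream_bytes * viewers


# Hypothetical example: a 1 MB segment of a live stream, two viewers on the same network.
SEGMENT = 1_000_000
print(link_load_bytes(SEGMENT, 2, multicast=False))  # 2000000
print(link_load_bytes(SEGMENT, 2, multicast=True))   # 1000000
```

The gap grows linearly with audience size, which is why multicast is most attractive for very popular live content.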

In the mid-to-late 1990s, CDN (Content Delivery Network) technology, which leveraged a network of distributed reverse proxy servers (cache servers in front of host servers), began to demonstrate performance gains by avoiding bottlenecks at Internet connection points, reducing round-trip delay, and providing a pool of server capacity to absorb flash crowds. The original CDNs focused on either websites or audio/video streams: Sandpiper (1997, now Level 3) and Akamai (1998) supported websites, while Audionet/Broadcast.com (1995, now Yahoo), InterVu (1996, now Akamai), and Microcast (1998) supported only streams. Over time this distinction became blurred, at least superficially, particularly after Akamai's acquisition of InterVu in 2000.
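
To illustrate how a reverse proxy absorbs a flash crowd, here is a minimal sketch of a cache sitting in front of an origin server. It assumes a single edge server and content that never expires; real CDN caches also handle TTLs, invalidation, and many distributed edge nodes. The class `ReverseProxyCache` and the `origin` function are hypothetical stand-ins.

```python
from typing import Callable, Dict


class ReverseProxyCache:
    """Toy edge cache placed in front of an origin (host) server.

    Assumption: cached objects never expire, so only the first request
    for a path reaches the origin.
    """

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.origin_requests = 0

    def get(self, path: str) -> bytes:
        if path not in self._store:
            # Cache miss: fetch once from the origin and keep a copy at the edge.
            self.origin_requests += 1
            self._store[path] = self._fetch(path)
        # Cache hit (or freshly filled entry): served from the edge.
        return self._store[path]


def origin(path: str) -> bytes:
    """Hypothetical origin server that renders content for a path."""
    return f"content for {path}".encode()


edge = ReverseProxyCache(origin)
for _ in range(10_000):  # a flash crowd requesting the same page
    edge.get("/news/breaking.html")
print(edge.origin_requests)  # 1
```

Ten thousand user requests translate into a single origin request, which is the capacity-pooling effect described above.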

As many new companies joined the CDN fray, competition drove continued innovation within the CDN industry. Different architectures evolved early on (1999/2000):

< Many smaller servers deeply deployed @ higher price points (Akamai)

< Fewer larger servers more efficiently deployed @ lower price points (Everyone Else)

< CDN Federation among Backbone/ISPs (collaborative approach @ lowest price points... slow adoption for reasons similar to multicast)

CDNs were effective at serving static content to many users (1 to many), but as more content became dynamically generated, interest grew in serving dynamic content from CDN proxies as well.

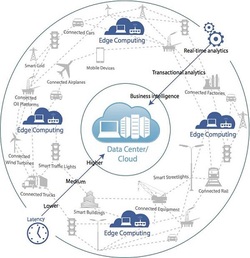

Akamai, in conjunction with other CDNs, submitted a markup language, Edge Side Includes (ESI), to the World Wide Web Consortium in 2001 as a means of assembling dynamic pages from templates in proxies. This signaled a shift away from the focus on network and infrastructure toward higher-layer functional integration with the application and toward Edge Computing. For many applications, client-side technology has reduced the need for edge computing; however, edge computing is still a best practice in some cases, particularly where client devices are underpowered, in parts of the world where older devices are common, or when requirements call for more client-side processing than current devices can handle.
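
The core idea of ESI, a cacheable page template with placeholders that the proxy fills in with separately fetched fragments, can be sketched as follows. This handles only the simplest self-closing `<esi:include src="..."/>` form; the ESI specification defines more (for example `alt` and `onerror` attributes, conditionals, and variables). The `assemble` and `fetch_fragment` functions and the `/fragments/cart` path are hypothetical.

```python
import re
from typing import Callable

# Matches only the simple self-closing form, e.g. <esi:include src="/fragments/cart"/>
ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def assemble(template: str, fetch_fragment: Callable[[str], str]) -> str:
    """Replace each <esi:include> tag with the fragment fetched for its src."""
    return ESI_INCLUDE.sub(lambda m: fetch_fragment(m.group(1)), template)


# Page template shared by all users, so it can be cached at the edge.
TEMPLATE = (
    "<html><body>"
    "<h1>Store</h1>"
    '<esi:include src="/fragments/cart"/>'
    "</body></html>"
)


def fetch_fragment(src: str) -> str:
    """Hypothetical per-user fragment source (stands in for a call to the origin)."""
    return {"/fragments/cart": "<div>Cart: 3 items</div>"}.get(src, "")


print(assemble(TEMPLATE, fetch_fragment))
# <html><body><h1>Store</h1><div>Cart: 3 items</div></body></html>
```

Only the small personalized fragment has to come from the origin; the rest of the page is served from the proxy's cache.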

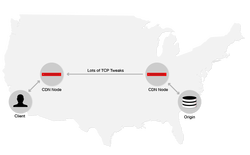

The next big network/infrastructure-based performance improvement came with DSA (Dynamic Site Acceleration), introduced by Netli in 2006 (later acquired by Akamai) and then refined by Cotendo in 2009, in which a series of network and server optimizations are applied. Commonly applied treatments include TCP enhancements, routing path optimization, connection management, on-the-fly compression, SSL offload, and prefetching.

As networking and infrastructure matured, the software community took up the mantle of performance and began showing results in the mid-2000s with two parallel efforts: Web 2.0 and FEO (Front End Optimization).

Since the mid-90s, software companies had been working on methods to offload server-side assembly and dynamic content generation, but due to competing standards the efforts didn't gain traction until the mid-2000s, when JavaScript/Ajax revolutionized site design. Web 2.0's contribution to web performance is that it makes page rendering more efficient by updating only the parts of a page that change rather than reloading the whole page. This greatly reduces the traffic required to support dynamic content and also provides an alternative way to offload processing from the server and distribute the processing load.

On a concurrent track, the largest websites began funding performance research in the early 2000s, creating awareness of how different coding techniques impact performance. By the mid-2000s these efforts were getting broader attention, and in 2007 Steve Souders published his seminal work "High Performance Web Sites," based on observations made during his years at Yahoo, detailing which coding techniques are more efficient than their alternatives.

Together, Web 2.0 and FEO mark a shift in which the most significant performance improvements are driven by site developers through application enhancements. Use of both is considered a best practice.

In 2011, Cotendo released an Edge Computing framework that enabled execution of lightweight logic on edge devices, extending dynamic content functionality beyond ESI. In 2012/2013, Fastly made available an extensible caching platform based on Varnish that also allows decisions to be executed on edge devices, on a more open platform. Varnish also supports ESI markup. Edge Computing may also be implemented via cloud infrastructure.

Edge Computing is valuable where at least some of the following conditions exist:

1). High degree of Real Time Interactivity

2). Real-Time Response for Dynamic Content must be 1 second or less

3). Processing can use locally available data

4). Database Synchronization does not need to be immediate

5). Database Synchronization is not necessary

Applications such as AR/VR (Augmented/Virtual Reality), Gaming, Interactive TV, and IoT (Internet of Things) are interesting candidates. For some niche applications, Edge Computing is an evolving best practice.

Video Performance comes of age:

Between 2007 and 2010, several technologies (ABR, HTML5, and the H.264 codec) began to take shape that would eventually evolve into a de facto standard for video delivery, allowing innovators to focus on performance. Prior to this, competing protocols from companies such as Progressive Networks (RealNetworks), Microsoft, Apple, and Adobe inhibited third-party development.

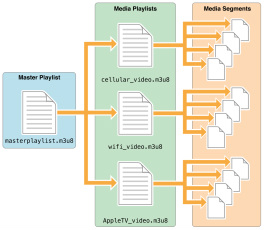

The modern era of video delivery was introduced in 2007 by Move Networks with ABR (Adaptive Bit Rate) technology, which provides functionality in the player that adjusts video quality as network conditions change. The usual suspects created their own versions of ABR (Apple's HLS, Adobe's HDS, and Microsoft's Smooth Streaming), as did Netflix for its own use. But in 2010, Apple declined to support Flash on its wildly popular iOS devices, ensuring a future for HLS over other ABR variants.
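
The player-side adjustment that ABR performs can be sketched with a simple throughput-based rule: pick the highest rendition that fits within a safety margin of the measured bandwidth. This is an illustrative assumption, not how any particular player (HLS, DASH, or otherwise) works; production players typically combine throughput estimates with buffer levels. The `choose_bitrate` function, the bitrate ladder, and the 0.8 safety factor are hypothetical.

```python
from typing import List


def choose_bitrate(ladder_kbps: List[int], measured_kbps: float, safety: float = 0.8) -> int:
    """Pick the highest rendition that fits within a fraction of measured throughput.

    Falls back to the lowest rendition when even that exceeds the budget.
    """
    budget = measured_kbps * safety
    ladder = sorted(ladder_kbps)
    fitting = [b for b in ladder if b <= budget]
    return fitting[-1] if fitting else ladder[0]


LADDER = [400, 1200, 2500, 5000]  # hypothetical bitrate ladder in kbps

for throughput in (6500, 2000, 300):
    print(throughput, "->", choose_bitrate(LADDER, throughput))
# 6500 -> 5000
# 2000 -> 1200
# 300 -> 400
```

Re-running this choice before each segment request is what lets quality step down when the network degrades and back up when it recovers.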

Currently, there is uncertainty about the future of standards for ABR (HLS or DASH) and codecs (H.264, H.265, or VP9), as well as about a variety of newer performance-oriented technologies, some of which are ready for prime time while others are worth watching as they mature. For the most current view of best practices for video performance, we maintain a list of techniques.

(Updated June 2018)
